AI Agents as Negotiators. An Experiment.

Could an AI Agent get you a better deal in a negotiation?

AI Agents are an exciting application of Large Language Models, and they promise to revolutionize the way we interact with software and other people. Beyond automating existing processes with known plans, such as buying an airline ticket for the user, a new and potentially valuable application is to use them as planning agents that chart their own course towards the final goal.

In this article we will explore using two AI agents to perform an essential and eminently human task: negotiating. The goals of the experiment are to study:

We have crafted two agents with almost identical prompts, the Seller and the Buyer. The object of the transaction is an Egg 🥚.

The Seller has a minimum price under which they will not sell. Likewise the Buyer has a maximum price over which they will not buy.

One of the two starts the transaction, and our system passes the messages back and forth between them. When either agent detects that a final price has been agreed upon, it outputs EXIT and a summary of the prices, which signals our system to stop.
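The message-passing loop described above can be sketched roughly as follows. This is a minimal illustration, not the experiment's actual code: the agents are scripted stand-ins for LLM calls, and the names `make_scripted_agent` and `run_negotiation`, the turn limit, the kick-off message, and the intermediate prices are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

# An agent takes the counterparty's last message and returns its reply.
Agent = Callable[[str], str]


def make_scripted_agent(replies: List[str]) -> Agent:
    """Hypothetical stand-in for an LLM-backed agent: replays canned replies."""
    remaining = iter(replies)

    def agent(incoming: str) -> str:
        # A real agent would send `incoming` plus its private prompt to an LLM.
        return next(remaining)

    return agent


def run_negotiation(
    first: Agent, second: Agent, kickoff: str, max_turns: int = 20
) -> Tuple[List[str], bool]:
    """Relay messages between two agents until one of them outputs EXIT."""
    transcript: List[str] = []
    message = kickoff
    speakers = (first, second)
    for turn in range(max_turns):
        message = speakers[turn % 2](message)
        transcript.append(message)
        if "EXIT" in message:
            return transcript, True  # an agent reported an agreement
    return transcript, False  # turn limit reached without agreement


# Illustrative scripts only; these are not outputs from the experiment.
seller = make_scripted_agent(
    ["I can sell the egg for $10.", "How about $7?", "EXIT. Agreed price: $6."]
)
buyer = make_scripted_agent(["I can pay $1.", "I can go up to $6."])

transcript, agreed = run_negotiation(seller, buyer, "Please start the negotiation.")
```

The turn limit is a design choice added here as a safety net, so that two agents that never converge cannot keep the loop running forever.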

Technically:

In short, yes. As you can see in the following example execution, the 2 agents try to maximize their profit. In other words, the Seller’s asking price tends to be higher than the Buyer’s last bidding price. Likewise, the Buyer’s bidding price tends to be lower than the Seller’s last asking price. And, after a number of steps the 2 agents cordially agree to a price somewhere between the limit prices that each of them has.

Remember, the limit prices are private, not part of a common prompt, and as you can see from the conversation, there is no exchange of this information.

In short, the two agents perform similarly to how you’d expect two rational economic agents to perform in a negotiation.
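To make "a price somewhere between the limit prices" concrete, here is a small sketch of how one might check an agreed price against both private limits and compute how much each side gained. The function name `deal_surplus` and the numbers used are hypothetical and are not taken from the experiment.

```python
from typing import Tuple


def deal_surplus(
    price: float, seller_min: float, buyer_max: float
) -> Tuple[float, float]:
    """Return (seller gain, buyer gain) for an agreed price.

    Raises ValueError if the price violates either private limit.
    """
    if not seller_min <= price <= buyer_max:
        raise ValueError("price is outside the range both parties can accept")
    seller_gain = price - seller_min  # how far above the Seller's floor
    buyer_gain = buyer_max - price    # how far below the Buyer's ceiling
    return seller_gain, buyer_gain


# Hypothetical limits: the Seller will not go below 4, the Buyer not above 8.
seller_gain, buyer_gain = deal_surplus(price=6.0, seller_min=4.0, buyer_max=8.0)
```

With these made-up limits, a price of 6 splits the available margin evenly; any price closer to 8 favors the Seller, and any price closer to 4 favors the Buyer.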

If you want to play with it, you can access it here. If you use your Gemini API key you can run it on a Google Colab notebook. Otherwise, you can play with it locally with an Ollama-run LLM.

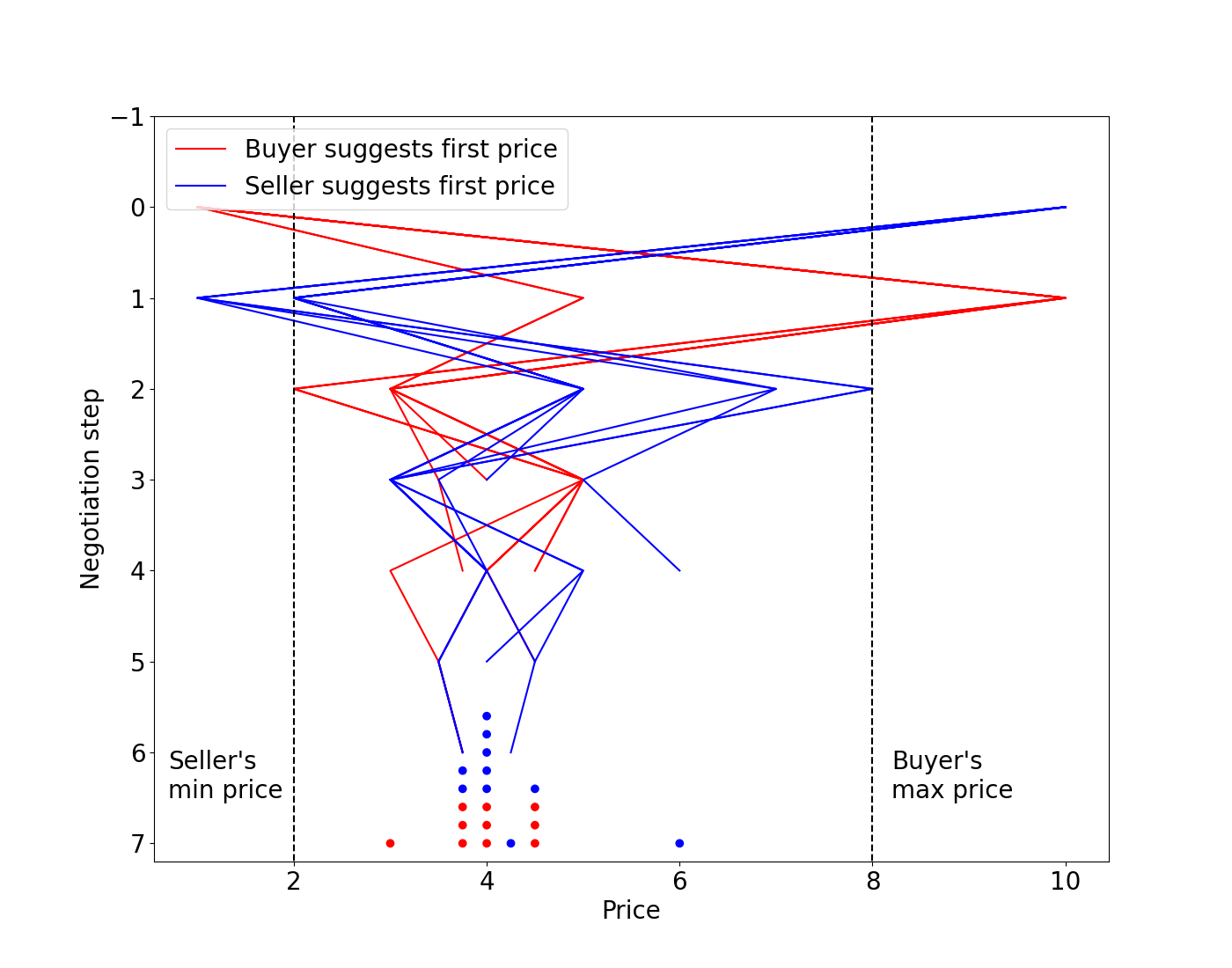

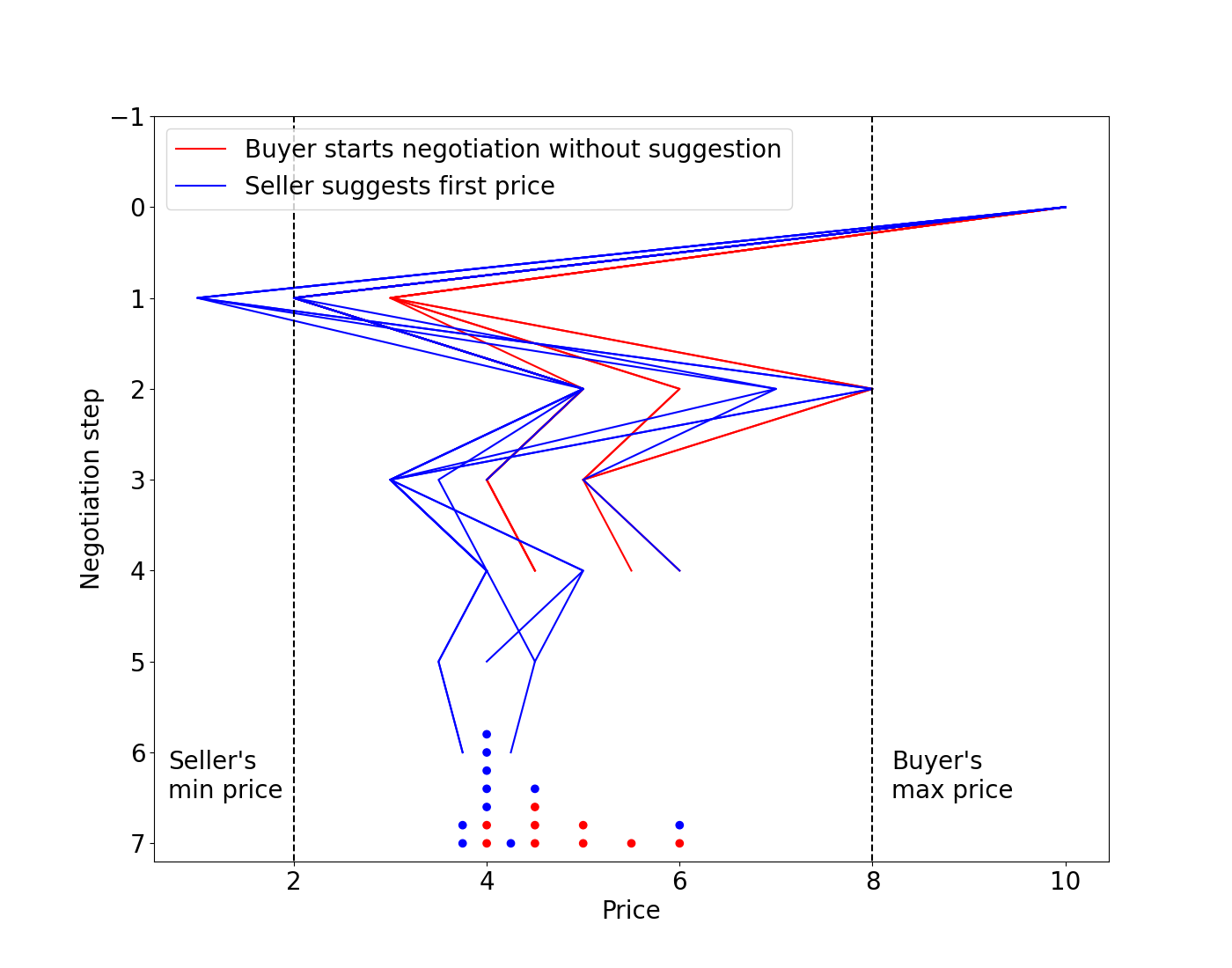

Having set up our experiment, let’s run it multiple times and compare the results under different conditions. We will consider 2 variables: which agent starts the conversation, and which agent proposes the first price.

We’ve chosen these 2 variables because we know from behavioral economics that whoever sets the first price, called the anchor, greatly influences the outcome of a negotiation.
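Crossing the two variables gives four scenarios. A rough sketch of how such a grid could be enumerated and summarized is shown below. Everything here is an assumption for illustration: `run_once` is a hypothetical callback that would run one full negotiation and return its final price, and `placeholder_run` returns a dummy value rather than any real result.

```python
from itertools import product
from statistics import mean
from typing import Callable, Dict, List, Tuple

ROLES = ("Seller", "Buyer")

# (who starts the conversation, who proposes the first price)
Scenario = Tuple[str, str]


def scenarios() -> List[Scenario]:
    """All combinations of conversation starter and first price proposer."""
    return list(product(ROLES, ROLES))


def summarize(
    run_once: Callable[[str, str], float], repeats: int
) -> Dict[Scenario, float]:
    """Mean final price per scenario over `repeats` independent runs."""
    return {
        (starter, anchor): mean(run_once(starter, anchor) for _ in range(repeats))
        for starter, anchor in scenarios()
    }


def placeholder_run(starter: str, anchor: str) -> float:
    """Dummy stand-in; a real version would run the two agents and parse the price."""
    return 0.0


grid = scenarios()
summary = summarize(placeholder_run, repeats=3)
```

Note that the starter and the first price proposer can differ: for instance, the Buyer may open by asking how much the egg costs, leaving the Seller to name the first price.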

As we can see from the charts below, proposing the first price has a greater influence on the final price than starting the conversation. The Buyer seems to always start with a price of $1 (thankfully not 0; what kind of an offer would that be?) and the Seller with a price of $10. The subsequent evolution of the price depends only on the whims of the LLM’s settings (temperature, sampling of the next token, etc.), as the prompts are always the same.

Suggesting the price matters

Starting the conversation matters less

Comparing the distribution of the final price for the 4 scenarios, we have a few interesting observations:

For this article we have examined only 2 possible variables that can influence the final price, but there are many more experiments that we could try:

During our initial experiments we also observed unexpected behaviours, such as a willingness on the part of the Seller to lower the price in exchange for the Buyer purchasing more than one 🥚. We explicitly prohibited that in the prompt, but it would also be interesting to explore upselling strategies.

Difficult to say, as this is just a short and fun experiment. But there are pros and cons.

First of all, unlike human negotiators, agents are not prone to emotions and sentimental thinking, so they follow a preset strategy more closely. However, they may be influenced by subtle cues from the counterparty, and this needs further investigation.

Secondly, agents can do this at scale, with thousands of counterparties at once, opening up new applications and opportunities.

However, the cons are also considerable. Mainly, this is still finicky technology; you would need firm guardrails and a strong contract so you don’t find yourself at a (potentially big) loss.

We’ve done a simple experiment that shows that negotiating agents exhibit rational economic behavior. We have also shown that the final price (and profit) is influenced by different variables. In particular, as in classic behavioral economics results, anchoring the price gives negotiators a big advantage.

If you want to give it a try or experiment with it, the code is available here.